Within a few weeks, DeepSeek has sent Nvidia’s share price tumbling. The AI-Aware tech team (Pravija Sandeep, Daryl Ramdin, Syed Huq, and I) created a DeepSeek-v3 dataset and took the AI-Aware system, which had not been trained on any DeepSeek data, for a spin. It performed surprisingly well. Questions have been raised about whether the model was distilled from ChatGPT (Criddle and Olcott 2025).

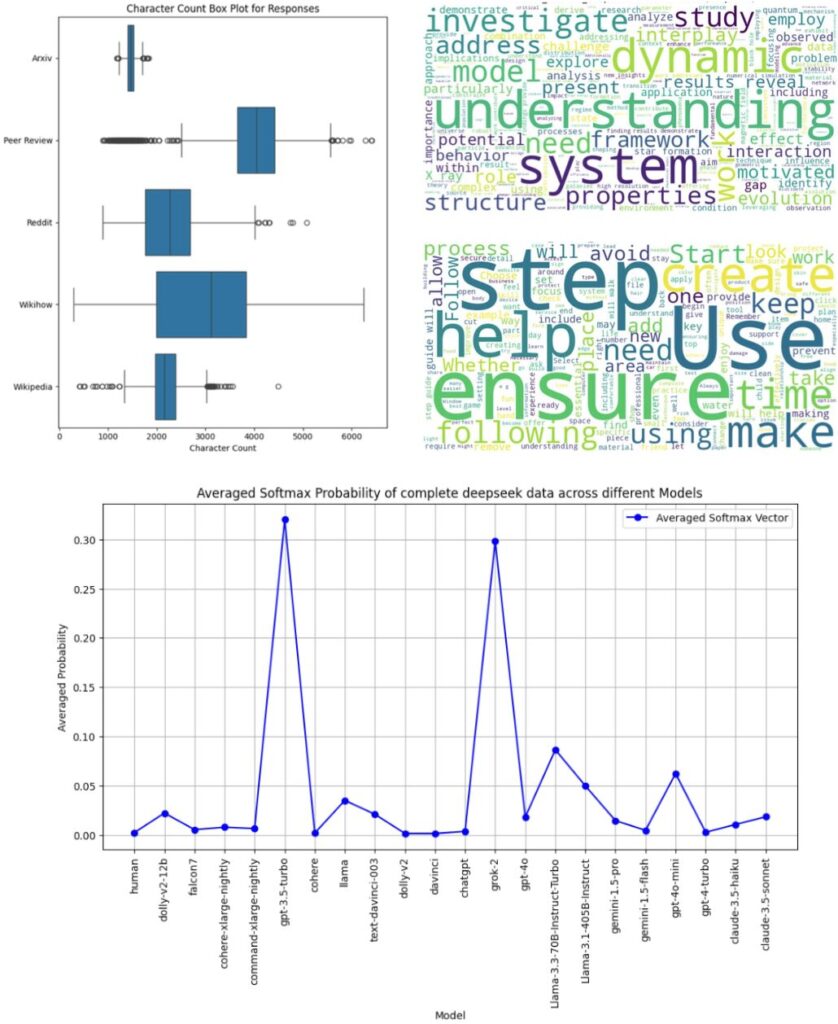

Test data: We generated a dataset similar to M4 (Wang et al 2024) containing different text types in English. We used DeepSeek-v3 with our M4-style prompts and applied cleaning similar to that of Wang et al (2024). The texts created by DeepSeek vary considerably in length, both within and between text types, and also differ in style (see below). The data is available at https://lnkd.in/e-Wxz6Th

AI Detection: The AI-Aware system, which has not been trained or tuned with DeepSeek data, correctly detected 5999 of the 6000 samples, i.e. 99.983%. This is similar to our detection rate for human text (99.79%) and much higher than we would expect for a new model. For comparison, we also tested two other recent models, Qwen 2.5-72B-instruct and WizardLM-2-8x22B, by generating smaller M4-style datasets (600 items) with only two text types (PeerRead and arXiv). The AI-Aware system achieved 100% detection for both models.
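As a quick check of the arithmetic, the detection rate is the number of correctly classified items divided by the total. The helper name `detection_rate` is our own illustration, not part of the AI-Aware system.

```python
def detection_rate(correct: int, total: int) -> float:
    """Percentage of items classified correctly."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * correct / total


# Counts reported above for DeepSeek-v3: 5999 of 6000 items detected.
print(f"{detection_rate(5999, 6000):.3f}%")  # 99.983%
```

With 600 items, a single miss would already move the rate to 99.833%, so the 100% results for Qwen and WizardLM rest on a much smaller sample than the DeepSeek figure.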

AI Generator Detection: The AI-Aware system also provides probabilities for different generators (currently excluding DeepSeek). In the average probability distribution, GPT3.5-turbo, the model behind the early versions of ChatGPT, has the highest probability, closely followed by Grok-2. We also found that WizardLM 2 (released 4 months before Grok-2) was detected as Grok-2 at a rate of 98%. Qwen 2.5 (released a month after Grok-2) was detected as Grok-2 at a rate of 52% and as GPT4o-mini at 25%.
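The paragraph above mixes two ways of summarising generator attribution: averaging the per-item probability distributions, and (our assumption about what the "detected as" percentages mean) counting the share of items whose most probable generator is a given model. The minimal sketch below contrasts the two on made-up toy probabilities; the data layout and function names are hypothetical and do not reflect the actual AI-Aware output.

```python
from collections import Counter

# Toy, made-up per-item generator probabilities for illustration only;
# the AI-Aware output format is not described in this post.
toy_items = [
    {"GPT3.5-turbo": 0.5, "Grok-2": 0.4, "GPT4o-mini": 0.1},
    {"GPT3.5-turbo": 0.2, "Grok-2": 0.7, "GPT4o-mini": 0.1},
    {"GPT3.5-turbo": 0.6, "Grok-2": 0.3, "GPT4o-mini": 0.1},
]


def average_distribution(items):
    """Average the per-item probability of each generator across all items."""
    generators = sorted({g for item in items for g in item})
    n = len(items)
    return {g: sum(item.get(g, 0.0) for item in items) / n for g in generators}


def top1_shares(items):
    """Share of items whose most probable generator is each generator."""
    counts = Counter(max(item, key=item.get) for item in items)
    n = len(items)
    return {g: c / n for g, c in counts.items()}


print(average_distribution(toy_items))
print(top1_shares(toy_items))
```

On this toy data Grok-2 has the higher average probability (about 0.47 vs 0.43), while GPT3.5-turbo is the top generator for two of the three items, so the two summaries can rank generators differently.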

Conclusion: The findings with the AI-Aware detector show excellent detection rates for DeepSeek and similarities with GPT3.5 and, interestingly, with Grok-2. However, we also see similar results for Qwen-2.5 and WizardLM-2. If the results on DeepSeek-v3 are due to distillation, the source does not appear to be GPT alone. Others probably use distillation too, and doing so is often legal. Perhaps multiple generations of distillation are leading most high-quality LLMs to produce increasingly similar text styles.

References

Liu, Feng, Xue, Wang, Wu, Lu et al (2024). DeepSeek-V3 Technical Report. arXiv:2412.19437.

Guo, Yang, Zhang, Song, Zhang, Xu et al (2025). DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv:2501.12948.

Wang, Mansurov, Ivanov, Su, Shelmanov, Tsvigun, Whitehouse, et al (2024). M4: Multi-generator, Multi-domain, and Multi-lingual Black-Box Machine-Generated Text Detection. EACL.

Criddle, C. and Olcott, E. (2025). OpenAI says it has evidence China’s DeepSeek used its model to train competitor. Financial Times, Jan 29. https://on.ft.com/4aDNsrd